GogoTraining conducted a webinar on Mar 15, where Dr. Suzanne Van Hove discussed several nuances of ITIL Practitioner. She explained the ITIL Practitioner course in detail, covered the concepts of IT Service Management, and discussed why you need the Practitioner. She also answered some frequently asked questions about ITIL Practitioner.

Key takeaways from the webinar:

- Students will need to understand the following terms & concepts:

- What Adopt & Adapt means

- Service Value

- Who is or can be the Customer

- Effective vs. Efficient

- VOCR (Value, Outcome, Costs, Risks)

- The importance of Communication, Metrics and Organizational Change Management to the Service Management consultant

- The need for and use of an improvement framework

A Peek Inside the ITIL Practitioner Exam

Passing the ITIL Practitioner exam is all about applying the concepts. Roughly 70-80% of the questions on the exam are application-based, while the rest test theory. You should therefore spend most of your preparation time (70-80%) on exercises that strengthen your ability to apply theoretical knowledge.

Most of the exercises in ITIL Practitioner training are based on case studies, each presenting a fictitious company in a hypothetical situation. You need to apply ITIL concepts and identify the key elements of the scenario in order to implement the proper solution within that context. You can solve the problems correctly only by applying the concepts properly.

The ITIL Practitioner course offers several advantages: it helps you gain practical skills, builds your self-confidence, and teaches you about the environment in which Service Management decisions are made within an organization. It also helps you become an internal or external specialist and use the skills you have learned to provide expert professional advice.

Frequently Asked Questions

Question: Are exam questions similar to the exercises in the course?

Answer: No. The exercises are designed to reinforce the principles. Since the exam questions are based on a particular case, you need a thorough understanding of the concepts and how to apply them. However, practicing the exercises will help you do better on the final exam.

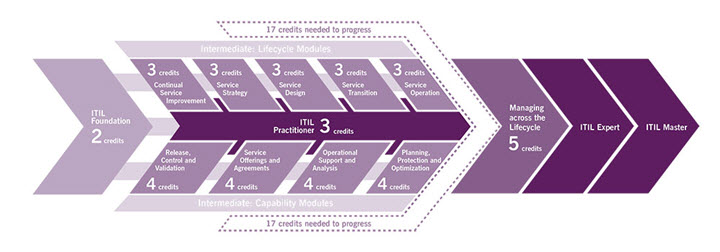

Question: When can I take the ITIL Practitioner exam?

Answer: ITIL Foundation is a prerequisite for the ITIL Practitioner exam. It is also recommended that you take at least one or two Intermediate classes before attempting ITIL Practitioner so that you develop an understanding of the context and of critical ITSM concepts.

Question: How difficult is it for experienced ITSM professionals to take the Practitioner exam without completing a training program?

Answer: It will be difficult, because the exam uses specific language and you must take it under particular exam conditions. You will need to study the course material extensively and familiarize yourself with all the core concepts before taking the exam.

Question: How much studying do I need to pass the exam?

Answer: It depends on the individual. Many concepts are subtle, while others are quite dynamic. You need to read the course text thoroughly and practice the activities given in the book. If you have completed the ITIL Continual Service Improvement (CSI) Intermediate course, it will help with your Practitioner exam preparation. You may also highlight the key concepts in the book so that you can refer to them quickly during the exam.

Question: Should I take any particular Intermediate Class before attempting the ITIL Practitioner exam?

Answer: It is a good idea to take one or two Intermediate courses before you take ITIL Practitioner. If you have not taken any yet, we recommend CSI or Service Strategy.

Question: How is ITIL Practitioner different from ITIL Expert?

Answer: ITIL Expert differs from ITIL Practitioner in both content and context. The Expert does not deal with soft skills, and although it is more practical than the Intermediates, it still requires you to memorize the concepts. ITIL Practitioner is more hands-on and based on your application of concepts in different contexts.

Conclusion

You need ITIL Practitioner to learn the skills necessary to lead an Adopt & Adapt initiative within your organization. ITIL Practitioner differs significantly from the other ITIL courses: in the Practitioner, your focus is not on memorizing new knowledge but on synthesizing what you already know and applying it to real-life situations. Active participation in course exercises, combined with focused study, is critical to passing this difficult exam.

If you have any questions for Dr. Van Hove, please send them to customerservice@gogotraining.com.